Microsoft Says It Closed Loophole That Allowed Taylor Swift AI Porn to Be Created

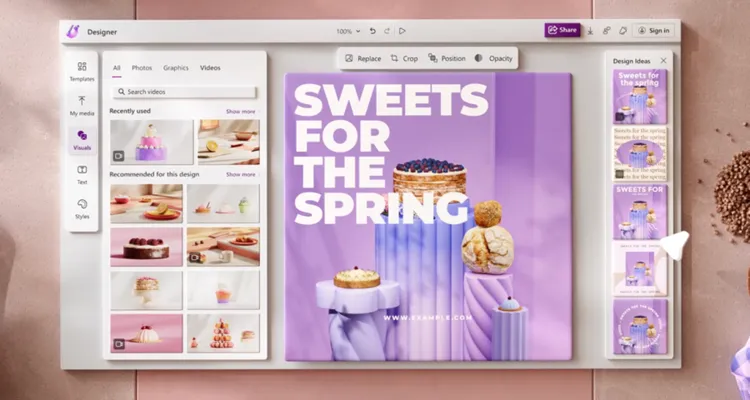

Photo Credit: Microsoft

After explicit AI-generated images featuring Taylor Swift’s face swept across social media, Microsoft says it fixed the ‘loophole’ that allowed their creation in the first place.

Last week, reporting suggested that the Microsoft Designer tool was integral to the creation of these images. Microsoft Designer is an AI text-to-image generator intended to make it easier to create image-based social media marketing. However, Telegram group chats were using the service to create nonconsensual, sexually explicit material of celebrities, and the Taylor Swift images went viral.

X/Twitter spent most of this weekend blocking searches for Taylor Swift as it worked to scrub the images. 404 Media reported that the images originated in the Telegram channel and were shared on 4chan before being uploaded to Twitter. "We are investigating these reports and are taking appropriate action to address them," a Microsoft spokesperson told 404 Media in an email.

The 4chan thread where the images were first shared outside the Telegram group also included instructions for 'jailbreaking' Microsoft Designer. Jailbreaking an AI image generator means getting it to bypass its guardrails and do things it has been programmed not to do, like create explicit images or share the recipe for making napalm.

So how did these jailbreaks work? Did they inject code? Did they hijack the AI's guidelines? Nope. Each of these jailbreaks is just cleverly structured wording that gets the AI generator to slip past the guidelines put in place for its use.

In this case, the phrase 'Taylor Swift' would not generate the images, but 'Taylor "singer" Swift' was enough to convey the intended subject to the AI without triggering its guidelines, which did not treat the new string as equivalent to 'Taylor Swift.' (It's a clever language-processing problem that AI guidelines will struggle to rein in, because humans are endlessly creative with how they use language.)

This was the loophole Microsoft has fixed: the AI generator that powers Designer now treats the 'Taylor "singer" Swift' string the same as the 'Taylor Swift' string when it is combined with other instructions. Meanwhile, 'prompt engineers' on 4chan claim to have found additional ways around the guardrails Microsoft is working feverishly to put in place.

“I think we all benefit when the online world is a safe world,” Satya Nadella said when asked about the Taylor Swift deepfakes. “I don’t think anyone would want an online world that is completely not safe for both content creators and content consumers. So therefore, I think it behooves us to move fast on this.”

Move fast and break things, right Silicon Valley?

Link to the source article – https://www.digitalmusicnews.com/2024/02/01/taylor-swift-ai-porn-loophole/
